Applied analysis of Bayesian inverse problems

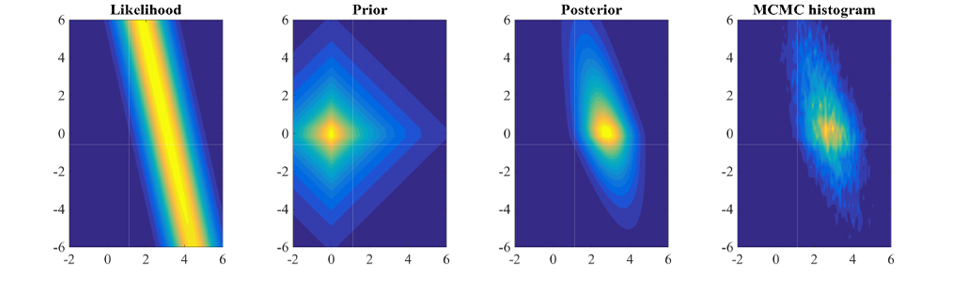

Over the last few decades the Bayesian methodology has attracted considerable attention in the inverse problems community (see the article by Stuart or the book by Kaipio and Somersalo for an introduction). The goal of this approach is to infer an unknown parameter from a set of noisy, indirect measurements. We start by identifying a forward map that models the measurements, as well as a prior probability measure that models our prior knowledge of the parameter. We then combine the forward map and the prior measure to obtain a posterior probability measure, which is regarded as the solution to the inverse problem.
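As a rough illustration of how the forward map and the prior combine, the standard formulation found in the introductory references above can be written as follows. The symbols $\mathcal{G}$ (forward map), $\eta$ (noise), $\Gamma$ (noise covariance), $\mu_0$ (prior), $\mu^y$ (posterior) and $\Phi$ (likelihood potential) are notation assumed here for illustration, and additive Gaussian noise is only one common modelling choice.

```latex
\begin{align*}
  y &= \mathcal{G}(u) + \eta, \qquad \eta \sim N(0, \Gamma), \qquad u \sim \mu_0, \\
  \frac{d\mu^y}{d\mu_0}(u) &= \frac{1}{Z(y)} \exp\bigl(-\Phi(u; y)\bigr), \qquad
  \Phi(u; y) = \tfrac{1}{2} \bigl| \Gamma^{-1/2} \bigl( y - \mathcal{G}(u) \bigr) \bigr|^2, \\
  Z(y) &= \int \exp\bigl(-\Phi(u; y)\bigr) \, d\mu_0(u).
\end{align*}
```

Writing the posterior as a density with respect to the prior, rather than with respect to Lebesgue measure, is what lets the formula make sense when $u$ lives in an infinite-dimensional space.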

A particularly challenging setting for Bayesian inverse problems is when the parameter belongs to an infinite-dimensional Banach space. This is the case in inverse problems where the forward map involves the solution of a partial differential equation (PDE). A key question in infinite-dimensional Bayesian inverse problems is that of well-posedness: is the solution to the inverse problem well-defined, and does it depend continuously on the data?

I am interested in the issue of well-posedness when the prior measure is not Gaussian and has heavy tails. I have studied the cases of non-Gaussian priors with exponential tails and infinitely divisible priors. For more information see the following articles: Link 1 and Link 2.

Compressible priors

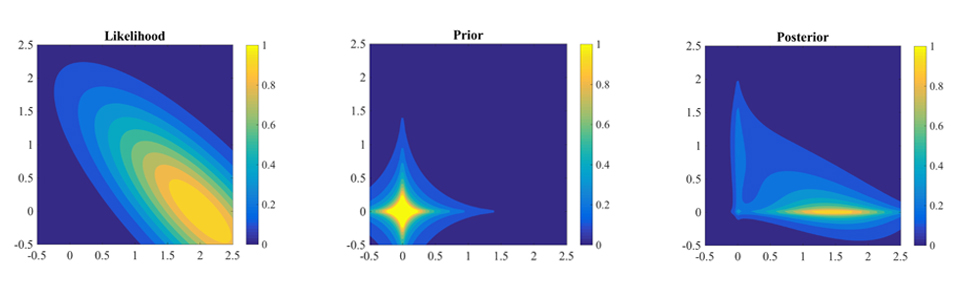

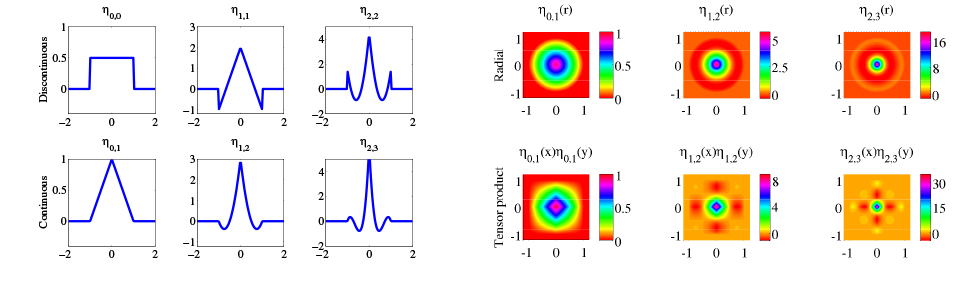

Estimation of sparse parameters is a central problem in areas such as compressive sensing, inverse problems, and statistics, with wide applications in image compression, medical and astronomical imaging, and machine learning. I am interested in the case where the compressible parameter of interest belongs to an infinite-dimensional Banach or Hilbert space. My goal is to develop a framework for estimating compressible parameters together with the uncertainties associated with the estimated values.

I am focusing on the choice of appropriate prior measures that can model compressible parameters. In the manuscript "Well-posed Bayesian inverse problems with infinitely divisible and heavy-tailed prior measures", I studied the theoretical aspects of Bayesian inverse problems with heavy-tailed priors and laid the groundwork for the analysis of Bayesian inverse problems with compressible priors. I also introduced an instance of a class of prior measures to model compressibility.

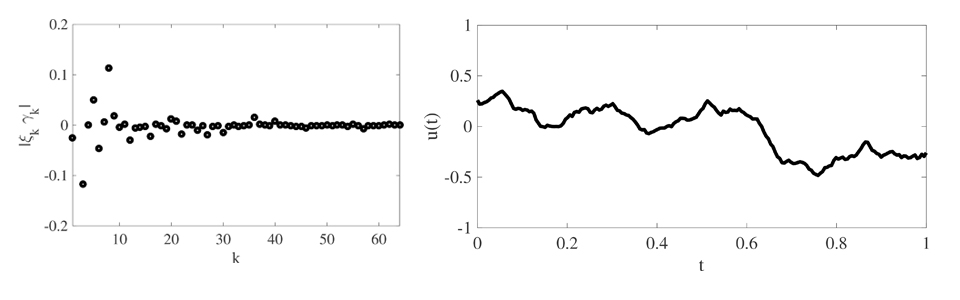

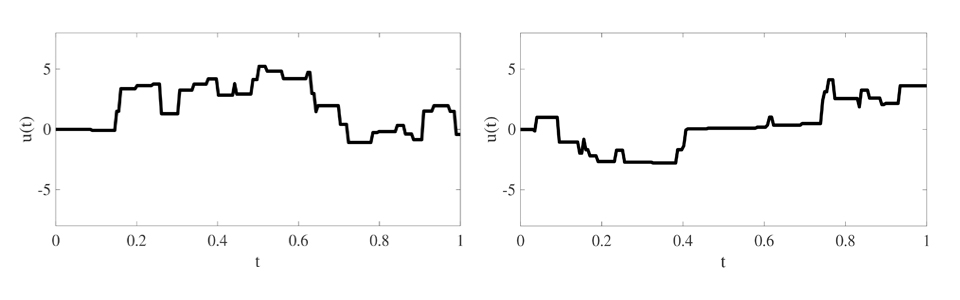

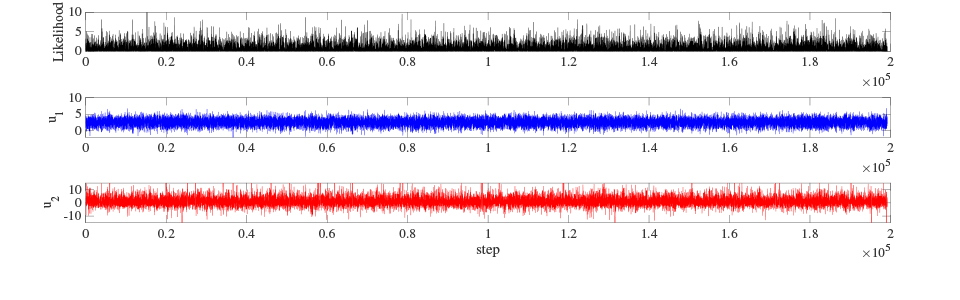

MCMC for non-Gaussian priors

The main challenge in practical applications of the Bayesian methodology for parameter estimation is the extraction of information from the posterior probability measure. The main workhorse of the Bayesian framework in this context is the Markov chain Monte Carlo (MCMC) method. I am interested in infinite-dimensional problems which, in practice, are approximated by a high-dimensional problem that results from discretization. Furthermore, in some cases the priors that are used in the inverse problem are highly non-Gaussian (such as the compressible priors above). Due to the combination of high dimension and non-Gaussianity, most conventional MCMC algorithms become highly inefficient when sampling from the resulting posteriors.

Recently, Cotter et al. introduced several classes of MCMC algorithms that are reversible in infinite dimensions but rely on the assumption that the prior measure is Gaussian. I am interested in the design of algorithms that can sample from self-decomposable prior measures and are reversible in infinite dimensions. A particularly interesting case of such prior measures is the Besov prior of Lassas et al., which is closely related to ℓ1 regularization of the wavelet coefficients in compressed sensing.
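For context, one well-known member of the Gaussian-prior family is the preconditioned Crank-Nicolson (pCN) sampler. The block below is a minimal sketch of pCN on a toy finite-dimensional problem, not the algorithms for self-decomposable priors discussed above. The function names (`pcn_sample`, `phi`, `sample_prior`), the identity forward map, and the standard normal prior are all assumptions made for illustration.

```python
import numpy as np


def pcn_sample(phi, sample_prior, u0, beta=0.2, n_steps=5000, seed=0):
    """pCN sampler for a posterior with density exp(-phi) w.r.t. a centred Gaussian prior.

    phi          : negative log-likelihood (potential), maps a vector to a float
    sample_prior : draws one sample from the centred Gaussian prior, given an rng
    beta         : step size in (0, 1]
    """
    rng = np.random.default_rng(seed)
    u = np.asarray(u0, dtype=float)
    phi_u = phi(u)
    chain = np.empty((n_steps, u.size))
    accepted = 0
    for k in range(n_steps):
        xi = sample_prior(rng)
        # The proposal preserves the Gaussian prior exactly, for any dimension.
        v = np.sqrt(1.0 - beta**2) * u + beta * xi
        phi_v = phi(v)
        # The acceptance ratio involves only the potential, not the prior density.
        if np.log(rng.uniform()) < phi_u - phi_v:
            u, phi_u = v, phi_v
            accepted += 1
        chain[k] = u
    return chain, accepted / n_steps


# Toy problem (hypothetical): identity forward map, N(0, I) prior, noise level sigma.
d = 4
y = np.ones(d)
sigma = 1.0


def phi(u):
    return 0.5 * np.sum((y - u) ** 2) / sigma**2


def sample_prior(rng):
    return rng.standard_normal(d)


chain, rate = pcn_sample(phi, sample_prior, u0=np.zeros(d), beta=0.5, n_steps=20000)
# For this linear-Gaussian toy problem the exact posterior mean is y / (1 + sigma**2);
# the chain average after burn-in should be close to it.
posterior_mean = chain[2000:].mean(axis=0)
```

The proposal step is where the Gaussian assumption enters: the map from `u` to `v` leaves a centred Gaussian invariant, which fails for non-Gaussian priors such as the Besov prior.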

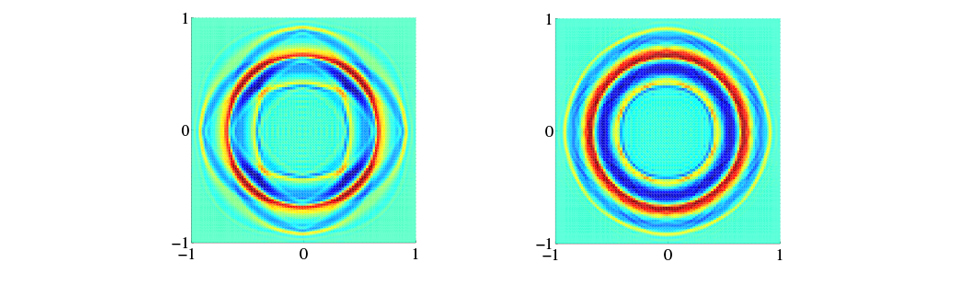

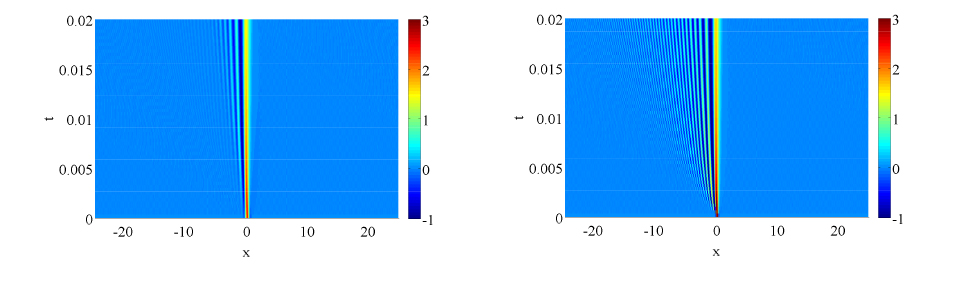

Regularization of singular source terms

I am interested in consistent regularizations of singular source terms for the numerical solution of PDEs. This project is motivated by the need to regularize singular source terms in a range of applications such as the immersed boundary and level set methods. Previously, I studied smooth regularizations of the Dirac delta distribution (link to article). I introduced a general framework for the construction of such regularizations and studied their convergence to the Dirac delta distribution in different topologies. Currently, I am working on extending those results to sources that are supported on a manifold, such as line sources.
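To make the idea of a regularized delta concrete, here is a minimal sketch using a Gaussian kernel of width `eps`. This is a generic textbook choice, not the framework from the article, and the names `gaussian_delta` and `pairing` are hypothetical. It checks weak convergence numerically: the pairing of the regularized delta with a smooth test function should approach the value of that function at zero as `eps` shrinks.

```python
import numpy as np


def gaussian_delta(x, eps):
    """Gaussian regularization of the Dirac delta with width eps (integrates to 1)."""
    return np.exp(-0.5 * (x / eps) ** 2) / (eps * np.sqrt(2.0 * np.pi))


def pairing(f, eps, half_width=10.0, n=200001):
    """Approximate the integral of gaussian_delta(x, eps) * f(x) by a Riemann sum."""
    x = np.linspace(-half_width, half_width, n)
    dx = x[1] - x[0]
    return np.sum(gaussian_delta(x, eps) * f(x)) * dx


# Test function f = cos, so the limiting value is cos(0) = 1.
# For this kernel the pairing equals exp(-eps**2 / 2), so the error is O(eps**2).
errors = [abs(pairing(np.cos, eps) - 1.0) for eps in (0.4, 0.2, 0.1)]
```

Halving `eps` reduces the error by roughly a factor of four here, which reflects the vanishing first moment of the symmetric kernel.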