Artificial Intelligence (AI) has become a ubiquitous presence in our lives; few industries or sectors are untouched by its implications. Post-secondary education is no exception: AI can be used as a tool in student admissions, recruitment, data analytics, and learning assessment to lessen the administrative burden.

Through computation and mathematics, AI appears able to equal or even surpass the decision-making abilities of humans. But the data it relies on is ultimately provided by humans and is subject to faults and biases. This presents a dilemma: is it ethical to trust AI?

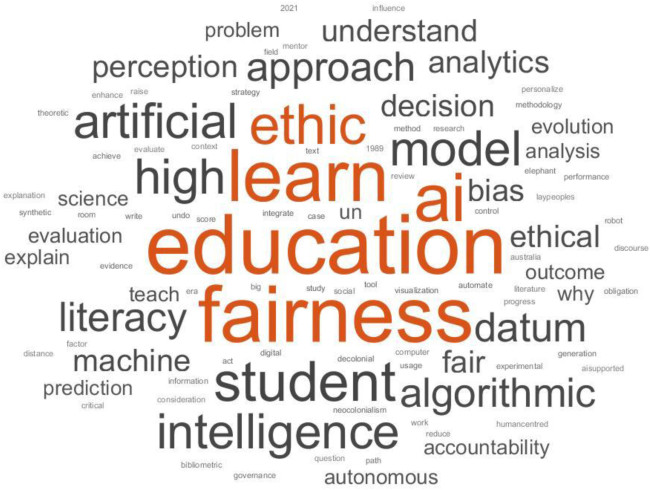

Simon Fraser University (SFU) researchers Tenzin Doleck and Bahar Memarian investigated how notions of Fairness, Accountability, Transparency, and Ethics (FATE) are identified in the use of AI in higher education.

Doleck is a professor in SFU’s Faculty of Education, an associate member of the School of Computing Science, and Canada Research Chair (CRC) in Analytics for Learning Design. As CRC, Doleck is developing a learning environment and software tool known as DaTu to enhance post-secondary education for data science students.

Memarian has a background in engineering and is currently a postdoctoral fellow at SFU. She is an interdisciplinary researcher and educator with more than ten years of research and teaching experience at the intersection of applied and social sciences (e.g., human factors engineering, education, engineering education). She has designed and executed research projects as both a team leader and a member, and has an interest in research collaborations on topics that are significant for today’s society.

Memarian and Doleck’s paper, *Fairness, Accountability, Transparency, and Ethics (FATE) in Artificial Intelligence (AI) and higher education: A systematic review*, is the first study of its kind to examine the literature on issues of AI in higher education.

We spoke to Doleck and Memarian about their findings.

Based on your research, is it ethical to use Artificial Intelligence (AI) in higher education? What more needs to be done to ensure AI in education meets the FATE categories?

The potential use of AI applications and algorithms in education has generated mixed opinions. Educators and subcultures feel it can revolutionize existing educational praxis, but they are concerned that it may come with unforeseen and destructive drawbacks. This is particularly difficult because notions of Fairness, Accountability, Transparency and Ethics are often socially constructed and thus subjective. Following the emerging perspective of giving AI equal rights or responsibilities, we find that more research and discussion are needed on what it takes to teach AI and help it learn to strive for academic good rather than misconduct.

Can you explain algorithmic bias and why it is a concern?

Algorithmic bias generally refers to situations where an algorithm delivers systematically prejudiced results because of faulty assumptions in the machine learning process. Just as with social bias, we find errors that create unjust outcomes, particularly for vulnerable demographic groups. What makes algorithmic bias potentially worse is that it can make these errors more systematic, continuous and implicit.

What advice do you give professors on adapting to the use of AI in their classrooms? Do you encourage the use of certain programs (Grammarly or ChatGPT, for example) or the use of disclosure statements when AI is used to generate answers?

We find that the advice varies depending on the goals and use cases. Generally, it is good practice to acknowledge when, where and for what purposes AI is used in teaching and learning. An underlying factor that users need to address is the “why” of employing AI. Tools such as Grammarly may serve a specific and constrained purpose. The use of AI becomes questionable when it is not constrained and can take over all the tasks and responsibilities of humans in the educational system, in which case academic integrity is not maintained.

This work was supported by the Canada Research Chair Program and the Canada Foundation for Innovation.

SFU's Scholarly Impact of the Week series does not reflect the opinions or viewpoints of the university, but those of the scholars. The timing of articles in the series is chosen weeks or months in advance, based on a published set of criteria. Any correspondence with university or world events at the time of publication is purely coincidental.

For more information, please see SFU's Code of Faculty Ethics and Responsibilities and the statement on academic freedom.